Snowflake Integration¶

Overview¶

This document describes how to use the external function feature of Snowflake to invoke Driverless AI models as HTTP REST API endpoints.

Using the external function requires some setup and configuration in Snowflake and Amazon. For more information, refer to the Snowflake documentation on external functions:

https://docs.snowflake.com/en/sql-reference/external-functions-introduction.html

Requirements¶

Snowflake login credentials

Amazon EC2 login credentials

Driverless AI MOJO (pipelineSF.mojo)

Included in the demo files

DAIMojoRestServer

Included in the demo files

Driverless AI license

Provided through the partnership portal

Copy the license to the Snowflake_H2Oai directory. Name the file license.sig.

Java JDK 1.8

An open source JDK is included in the demo zip file and the demo scripts use that as the default. To use a different JVM, see the following steps:

Execute “java -version” to verify the available JVM on your platform has JDK 1.8 installed. The output of the command should indicate JDK 1.8, for example:

java -version openjdk version "1.8.0_121"

If the output does not show JDK 1.8, download a 1.8 JDK for your environment from one of the following sites:

Security¶

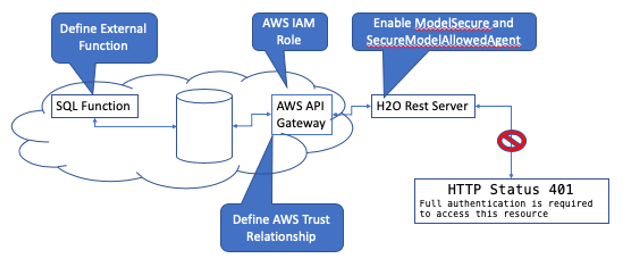

When using the external function, a call is made from Snowflake to the AWS API Gateway. This requires the configuration of trust relationships in AWS so that the call can be made.

The H2O REST Server only accepts calls from the AWS Gateway endpoint. When the parameter -DSecureModelAllowAgent=”AmazonAPIGateway.*|snowflake.*” is added to the command line, it’s even possible to further limit this to a specific AWS function.

Enabling -DModelSecureEndPoints=/** protects the Rest Server by requiring full authentication, effectivity blocking requests.

Installation¶

The following installation includes steps in Snowflake, AWS, and an EC2 instance where the H2O REST server is installed.

The following steps outline the REST server installation:

Create an EC2 Instance, a demo system should have the following minimum specification:

Operating System: Linux

CPU: 2

Memory: 16GB

Disk: 500MB

Note: For demos where larger datasets may be used, consider using more cores and larger memory sizes. For example, using 1 million rows, 48 cores, and 64GB results in much lower latency that a 2 core system.

Copy the distribution to the EC2 instance and extract the file.

unzip Snowflake_H2Oai_v1.zip

Create the database.

Populate the table with the sample data.

Verify that the data is available.

Starting the REST Server¶

Use the following steps to start the H2O REST server on the EC2 instance.

Ensure the current working directory is Snowflake-H2Oai/Function.

cd Snowflake-H2Oai/Function ./goServer.sh

Press ENTER to background the program. The log is written to nohup.log.

The REST server initiates after several seconds have passed. Check for a ready message similar to the following:

Started DaiMojoRestServer4Application in 4.778 seconds (JVM running for 6.22)

Verify REST Server Installation¶

To verify that the REST server and its model components were installed successfully and that the server initialized correctly:

Ensure that the current working directory is Snowflake-H2Oai/Function

Run the following script from a separate terminal window:

./goVerifyEndPoint.sh { "data":[[1,0.7779838]]}If the response is similar to the output from this example, the REST server, model, and license have been installed correctly.

Stopping the REST Server¶

To stop the H2O REST server on the EC2 instance, run the following commands:

cd Snowflake-H2Oai/Function ./stopServer.sh

External Function Example¶

The following is an example of an external function:

create or replace api integration demonstration_external_api_integration_01 api_provider=aws_api_gateway api_aws_role_arn='arn:aws:iam::xxxxxxxxxx:role/snowflake' api_allowed_prefixes=('https://aaaaaaaa.execute-api.us-east-1.amazonaws.com/MojoTest') enabled=true; create or replace external function H2OPredict(v varchar, v0 number, v1 varchar, v2 number, v3 number, v4 number, v5 number, v6 varchar, v7 varchar, v8 number, v9 number, v10 number, v11 number) returns variant api_integration = demonstration_external_api_integration_01 as 'https://aaaaaaaaa.execute-api.us-east-1.amazonaws.com/MojoTest';

Function Data Types¶

The preceding function passes 13 parameters (v to V11).

The number of parameters matches the number of parameters that the model was created with.

If the data in the table is a float and the function uses the

SQL Examples¶

Once the Snowflake and AWS Gateway has been configured, the following example SQL statements return predictions:

select H2OPredict('Modelname=pipelineSF.mojo’, LOAN_AMNT, TERM, INT_RATE, INSTALLMENT, EMP_LENGTH, ANNUAL_INC, VERIFICATION_STATUS, ADDR_STATE, DTI, DELINQ_2YRS, REVOL_BAL, REVOL_UTIL ) from LENDINGCLUB where ADDR_STATE='NJ' order by ID;

Passing Runtime Parameters¶

The following is a list of parameters used to pass specific values to the REST server:

Modelname: The name of the Driverless AI MOJO file that exists in the REST server ModelDirectory. This is pipeline.mojo by default.

Prediction: The numeric prediction to use. This is 0 by default.

Sample parameter usage:

select *, H2OPredict('Modelname=pipelineSF.mojo Prediction=0',LOAN_AMNT, TERM, INT_RATE, INSTALLMENT, EMP_LENGTH, ANNUAL_INC, VERIFICATION_STATUS,

ADDR_STATE, DTI, DELINQ_2YRS, REVOL_BAL, REVOL_UTIL ) from LENDINGCLUB;

Request: 10625, 36 months,6.62,326.23,4,33000,VERIFIED - income,WA,27.38,0,6290,46.3

Response: ["bad_loan.0 : 0.917305","bad_loan.1 : 0.08269503"]

0.917305

Advanced Setup¶

The Snowflake External Function allows custom HTTP headers to be defined. In the following example, the HTTP header modelname is added to the function and is defined as pipeline.mojo for all calls to this function.

create or replace external function H2OPredictHDR(v0 number, v1 varchar, v2 number, v3 number, v4 number, v5 number, v6 varchar, v7 varchar, v8 number, v9 number, v10 number, v11 number)

returns variant

HEADERS=('modelname' = 'pipelineSF.mojo')

api_integration = demonstration_external_api_integration_01

as 'https://zzzzzzzzz.execute-api.us-east-1.amazonaws.com/production';

This allows function calls to not require any parameters. A function by itself is enough for each model:

select id, H2OPredictHDR(LOAN_AMNT, TERM, INT_RATE, INSTALLMENT, EMP_LENGTH, ANNUAL_INC, VERIFICATION_STATUS,

ADDR_STATE, DTI, DELINQ_2YRS, REVOL_BAL, REVOL_UTIL ) from LENDINGCLUB;

The prediction can also be passed if required. Otherwise, a probability of 0 is returned.

Building Models¶

The Snowflake external function feature lets you build Driverless AI models from a Snowflake worksheet. When requesting Driverless AI to build a model from a worksheet, the build status is updated in a table called MODELBUILD so that the build can be monitored.

This shows the different stages for the riskmodel being built, from requesting the specific table in Snowflake to final deployment for scoring in the REST Server.

Note: When the build finishes, the build experiment UUID is reported for auditability purposes.

Define build function example:

create or replace external function H2OBuild(v varchar)

returns variant

api_integration = demonstration_external_api_integration_01

as 'https://tnes6j1juc.execute-api.us-east-1.amazonaws.com/production';

Define Snowflake Table¶

A Snowflake table is used to track the status of the model build that

Requesting a Build Example

Use the function H2OBuild to change the requesting parameters:

select H2OBuild('Build --Table=LENDINGCLUB2 --Target=BAD_LOAN --Modelname=custchurn.mojo') ;

For more information on the parameters to the build request, see the following table:

Parameter |

Optional |

Description |

|---|---|---|

Table |

no |

Defines which Snowflake table to use for the model build |

Target |

no |

The column (feature) name to use as the models target from training |

Modelname |

no |

The name the model will have when deployed |

Accuracy |

yes |

Model accuracy setting |

Time |

yes |

Model experiment time |

Interpretability |

yes |

Model interpretability setting |

User |

yes |

Username required to access Snowflake table |

Password |

yes |

Password required to access Snowflake table |

Warehouse |

yes |

Snowflake warehouse |

Database |

yes |

Snowflake database |

Schema |

yes |

Snowflake schema |

Deployment¶

Once the model has finished building, it is copied to the REST server and becomes available for the H2OPredict scoring function.

Configuration¶

The script buildmodel.py must be copied from the SnowFlake Function directory to the location where the REST server executes. By default, this is /home/ec2-user/Snowflake-H2Oai/Function.

Note: The script code must be updated based on the environment you are using.

Driverless AI Snowflake Configuration¶

The Driverless AI configuration uses the standard default settings except for settings related to user security. Use the authentication method that is best suited to the environment that you are using. For more information, see Using the config.toml File and Configuring Authentication.

authentication_method = "local"

local_htpasswd_file = "/home/ec2-user/dai-1.8.5.1-linux-x86_64/.htpasswd"

This resource must be secured from unauthorized access and use.

To create a username and password using local authentication:

sudo htpasswd -B -c .htpasswd snowflake

Password xxxxxxxxxxxx

Requirements¶

The build functionality invokes a Python program that uses the Driverless AI Python Client to create an experiment. The following packages must be available:

sudo yum install httpd

sudo yum install python3

sudo pip3 install /home/ec2-user/dai-1.8.5.1-linux-x86_64/python/lib/python3.6/site-packages/h2oai/ui/h2oai_client-1.8.5.1-py3-none-any.whl

sudo pip3 install –upgrade snowflake-connector-python

Sample Workbook¶

The following example shows how to use the functions once the initial setup has been completed.

Note: H2OBuild and H2OPredict must be defined for the following example to work.

Use the updates to the Snowflake table MODELBUILD to track each stage of the build.

SELECT * FROM MODELBUILD;

Using the Snowflake table LENDINGCLUB2, build a model to predict the BAD_LOAN column. Name the model riskmodel.mojo.

SELECT H2OBuild('Build --Table=LENDINGCLUB2 --Target=BAD_LOAN --Modelname=riskmodel.mojo') ;

Once the model is deployed, retrieve the suggest Snowflake function definition.

SELECT H2OBuild('Modelname= riskmodel.mojo Function');

The preceding function provides a suggested Snowflake SQL statement to call the model.

SELECT H2OBuild('Modelname= riskmodel.mojo SQL') ;

Use the Snowflake external function.

SELECT H2OPredict(LOAN_AMNT, TERM, INT_RATE, INSTALLMENT, EMP_LENGTH, HOME_OWNERSHIP, ANNUAL_INC, VERIFICATION_STATUS, ADDR_STATE, DTI, DELINQ_2YRS, INQ_LAST_6MNTHS, PUB_REC, REVOL_BAL, REVOL_UTIL, TOTAL_ACC ) from LENDINGCLUB;