Driverless AI MLI Standalone Python Scoring Package

This package contains an exported model and Python 3.11 source code examples for productionizing models built using the H2O Driverless AI Machine Learning Interpretability (MLI) tool. It is available only for interpreted models and can be downloaded by clicking the Scoring Pipeline button on the Interpreted Models page.

The files in this package let you obtain reason codes for a given row of data in a couple of different ways:

From Python 3.11, you can import a scoring module and use it to transform and score on new data.

From other languages and platforms, you can use the TCP/HTTP scoring service bundled with this package to call into the scoring pipeline module through remote procedure calls (RPC).

MLI Python Scoring Package Files

The scoring-pipeline-mli folder includes the following notable files:

example.py: An example Python script demonstrating how to import and interpret new records.

run_example.sh: Runs example.py. (This also sets up a virtualenv with prerequisite libraries.)

run_example_shapley.sh: Runs example_shapley.py. This compares K-LIME and Driverless AI Shapley reason codes.

tcp_server.py: A standalone TCP server for hosting MLI services.

http_server.py: A standalone HTTP server for hosting MLI services.

run_tcp_server.sh: Runs the TCP scoring service (specifically, tcp_server.py).

run_http_server.sh: Runs the HTTP scoring service (specifically, http_server.py).

example_client.py: An example Python script demonstrating how to communicate with the MLI server.

example_shapley.py: An example Python script demonstrating how to compare K-LIME and Driverless AI Shapley reason codes.

run_tcp_client.sh: Demonstrates how to communicate with the MLI service via TCP (runs example_client.py).

run_http_client.sh: Demonstrates how to communicate with the MLI service via HTTP (using curl).

Quick Start

There are two methods for starting the MLI Standalone Scoring Pipeline.

Quick Start - Recommended Method

This is the recommended method for running the MLI Scoring Pipeline. Use this method if:

You have an air-gapped environment with no access to the Internet.

You want to use a quick start approach.

Prerequisites

A valid Driverless AI license key.

A completed Driverless AI experiment.

Downloaded MLI Scoring Pipeline.

Running the MLI Scoring Pipeline - Recommended

Download the TAR SH version of Driverless AI from https://www.h2o.ai/download/.

Use bash to execute the download. This creates a new dai-nnn folder.

Change directories into the new Driverless AI folder.

cd dai-nnn

Run the following to install the Python Scoring Pipeline for your completed Driverless AI experiment:

./dai-env.sh pip install /path/to/your/scoring_experiment.whl

Run the following command to run the included scoring pipeline example:

DRIVERLESS_AI_LICENSE_KEY="pastekeyhere" SCORING_PIPELINE_INSTALL_DEPENDENCIES=0 ./dai-env.sh /path/to/your/run_example.sh

Quick Start - Alternative Method

This section describes an alternative method for running the MLI Standalone Scoring Pipeline. This version requires Internet access.

Note

If you use a scorer from a version prior to 1.10.4.1, you need to add export SKLEARN_ALLOW_DEPRECATED_SKLEARN_PACKAGE_INSTALL=True prior to creating the new scorer python environment, either in run_example.sh or in the same terminal where the shell scripts are executed. Note that this change will not be necessary when installing the scorer on top of an already built tar.sh, which is the recommended way.

Prerequisites

Valid Driverless AI license.

The scoring module and scoring service are supported only on Linux with Python 3.11 and OpenBLAS.

The scoring module and scoring service download additional packages at install time and require internet access. Depending on your network environment, you might need to set up internet access via a proxy.

Apache Thrift (to run the scoring service in TCP mode)

Examples of how to install these prerequisites are below.

Installing Python 3.11 on Ubuntu 16.10 or Later:

sudo apt install python3.11 python3.11-dev python3-pip python3-dev \

python-virtualenv python3-virtualenv

Installing Python 3.11 on Ubuntu 16.04:

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt-get update

sudo apt-get install python3.11 python3.11-dev python3-pip python3-dev \

python-virtualenv python3-virtualenv

Installing Conda 3.6:

You can install Conda using either Anaconda or Miniconda. Refer to the links below for more information:

Miniconda - https://docs.conda.io/en/latest/miniconda.html

Installing the Thrift Compiler

Refer to Thrift documentation at https://thrift.apache.org/docs/BuildingFromSource for more information.

sudo apt-get install automake bison flex g++ git libevent-dev \

libssl-dev libtool make pkg-config libboost-all-dev ant

wget https://github.com/apache/thrift/archive/0.10.0.tar.gz

tar -xvf 0.10.0.tar.gz

cd thrift-0.10.0

./bootstrap.sh

./configure

make

sudo make install

Run the following to refresh the runtime shared library cache after installing Thrift.

sudo ldconfig /usr/local/lib

Running the MLI Scoring Pipeline - Alternative Method

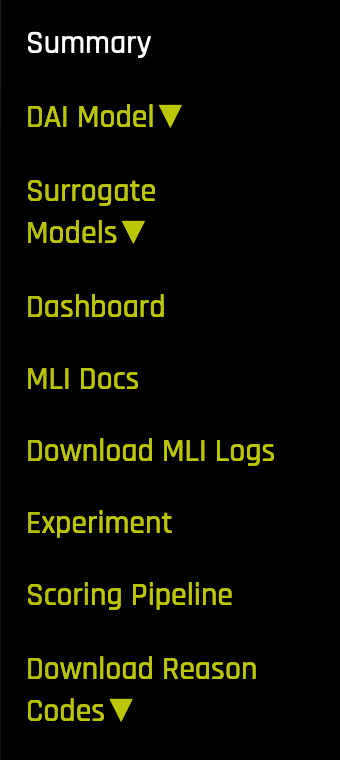

On the MLI page, click the Scoring Pipeline button.

Unzip the scoring pipeline, and run the following examples in the scoring-pipeline-mli folder.

Run the scoring module example. (This requires Linux and Python 3.11.)

bash run_example.sh

Run the TCP scoring server example. Use two terminal windows. (This requires Linux, Python 3.11 and Thrift.)

bash run_tcp_server.sh

bash run_tcp_client.sh

Run the HTTP scoring server example. Use two terminal windows. (This requires Linux, Python 3.11 and Thrift.)

bash run_http_server.sh

bash run_http_client.sh

Note: By default, the run_*.sh scripts mentioned above create a virtual environment using virtualenv and pip, within which the Python code is executed. The scripts can also use Conda (Anaconda/Miniconda) to create a Conda virtual environment and install the required package dependencies. The package manager to use is provided as an argument to the script.

# to use conda package manager

bash run_example.sh --pm conda

# to use pip package manager

bash run_example.sh --pm pip

MLI Python Scoring Module

The MLI scoring module is a Python module bundled into a standalone wheel file (named scoring_*.whl). All the prerequisites for the scoring module to work correctly are listed in the requirements.txt file. To use the scoring module, create a Python virtualenv, install the prerequisites, and then import and use the scoring module as follows:

----- See 'example.py' for complete example. -----

from scoring_487931_20170921174120_b4066 import KLimeScorer

scorer = KLimeScorer() # Create instance.

score = scorer.score_reason_codes([ # Call score_reason_codes()

7.416, # sepal_len

3.562, # sepal_wid

1.049, # petal_len

2.388, # petal_wid

])

The scorer instance provides the following methods:

score_reason_codes(list): Get K-LIME reason codes for one row (list of values).

score_reason_codes_batch(dataframe): Takes and outputs a Pandas DataFrame.

get_column_names(): Get the input column names.

get_reason_code_column_names(): Get the output column names.
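As a minimal sketch of how the methods listed above fit together, the helper below pairs each value returned by score_reason_codes() with the name reported by get_reason_code_column_names(). It assumes that the two lists have the same length and order, which this document does not confirm. StubScorer and its output column names are hypothetical stand-ins so that the snippet runs without the wheel installed; substitute the scorer from your own package.

```python
def label_reason_codes(scorer, row):
    """Return {output column name: reason code value} for one input row."""
    input_names = scorer.get_column_names()
    if len(row) != len(input_names):
        raise ValueError(
            f"expected {len(input_names)} values {input_names}, got {len(row)}"
        )
    values = scorer.score_reason_codes(row)
    names = scorer.get_reason_code_column_names()
    # Assumption: names and values align one-to-one and in the same order.
    if len(names) != len(values):
        raise ValueError("reason code names and values differ in length")
    return dict(zip(names, values))


class StubScorer:
    """Hypothetical stand-in for the packaged scorer (not real K-LIME output)."""

    def get_column_names(self):
        return ["sepal_len", "sepal_wid", "petal_len", "petal_wid"]

    def get_reason_code_column_names(self):
        # Made-up output names, used only for this illustration.
        return ["rc_sepal_len", "rc_sepal_wid", "rc_petal_len", "rc_petal_wid"]

    def score_reason_codes(self, row):
        # Echoes the inputs unchanged so the example has something to label.
        return list(row)


labeled = label_reason_codes(StubScorer(), [7.416, 3.562, 1.049, 2.388])
```

Checking the row length against get_column_names() before scoring gives a readable error when a column is missing, instead of a failure inside the scorer.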

The process of importing and using the scoring module is demonstrated by the bash script run_example.sh, which effectively performs the following steps:

----- See 'run_example.sh' for complete example. -----

virtualenv -p python3.11 env

source env/bin/activate

pip install --use-deprecated=legacy-resolver -r requirements.txt

python example.py

K-LIME vs Shapley Reason Codes

There are times when the K-LIME model score is not close to the Driverless AI model score. In this case it may be better to use reason codes using the Shapley method on the Driverless AI model. Note that the reason codes from Shapley will be in the transformed feature space.

To see an example of using both K-LIME and Driverless AI Shapley reason codes in the same Python session, run:

bash run_example_shapley.sh

For this bash script to succeed, MLI must be run on a Driverless AI model. If you have run MLI in standalone (external model) mode, there will not be a Driverless AI scoring pipeline.

If MLI was run with transformed features, the Shapley example scripts will not be exported. You can generate exact reason codes directly from the Driverless AI model scoring pipeline.
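The choice described in this section, using Shapley reason codes when the K-LIME score is not close to the Driverless AI score, could be sketched as a simple threshold check. The function name and the default tolerance of 0.05 are illustrative assumptions, not values taken from Driverless AI; pick a tolerance that suits the scale of your model's predictions.

```python
def choose_reason_codes(klime_score, dai_score, tolerance=0.05):
    """Return "klime" when the surrogate tracks the model, else "shapley".

    tolerance is a hypothetical cut-off on the absolute score difference.
    """
    if tolerance < 0:
        raise ValueError("tolerance must be non-negative")
    if abs(klime_score - dai_score) <= tolerance:
        return "klime"
    return "shapley"


close_case = choose_reason_codes(klime_score=0.81, dai_score=0.80)
far_case = choose_reason_codes(klime_score=0.30, dai_score=0.80)
```

Keep in mind that the two kinds of reason codes are not interchangeable: as noted above, the Shapley reason codes are expressed in the transformed feature space.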

MLI Scoring Service Overview

The MLI scoring service hosts the scoring module as an HTTP or TCP service. Doing this exposes all the functions of the scoring module through remote procedure calls (RPC).

In effect, this mechanism lets you invoke scoring functions from languages other than Python on the same computer, or from another computer on a shared network or the internet.

The scoring service can be started in two ways:

In TCP mode, the scoring service provides high-performance RPC calls via Apache Thrift (https://thrift.apache.org/) using a binary wire protocol.

In HTTP mode, the scoring service provides JSON-RPC 2.0 calls served by Tornado (http://www.tornadoweb.org).

Scoring operations can be performed on individual rows (row-by-row) using score or in batch mode (multiple rows at a time) using score_batch. Both functions let you specify pred_contribs=[True|False] to get MLI predictions (K-LIME/Shapley) on a new dataset. See the example_shapley.py file for more information.

MLI Scoring Service - TCP Mode (Thrift)

The TCP mode lets you use the scoring service from any language supported by Thrift, including C, C++, C#, Cocoa, D, Dart, Delphi, Go, Haxe, Java, Node.js, Lua, Perl, PHP, Python, Ruby and Smalltalk.

To start the scoring service in TCP mode, you will need to generate the Thrift bindings once, then run the server:

----- See 'run_tcp_server.sh' for complete example. -----

thrift --gen py scoring.thrift

python tcp_server.py --port=9090

Note that the Thrift compiler is only required at build time. It is not a runtime dependency: once the scoring services are built and tested, you do not need to repeat this installation process on the machines where the scoring services are intended to be deployed.

To call the scoring service, generate the Thrift bindings for your language of choice, then make RPC calls via TCP sockets using Thrift’s buffered transport in conjunction with its binary protocol.

----- See 'run_tcp_client.sh' for complete example. -----

thrift --gen py scoring.thrift

----- See 'example_client.py' for complete example. -----

socket = TSocket.TSocket('localhost', 9090)

transport = TTransport.TBufferedTransport(socket)

protocol = TBinaryProtocol.TBinaryProtocol(transport)

client = ScoringService.Client(protocol)

transport.open()

row = Row()

row.sepalLen = 7.416 # sepal_len

row.sepalWid = 3.562 # sepal_wid

row.petalLen = 1.049 # petal_len

row.petalWid = 2.388 # petal_wid

scores = client.score_reason_codes(row)

transport.close()
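In the client code above, transport.close() is skipped if the scoring call raises. One way to guard against that, sketched here under the assumption that the transport only needs open() and close(), is a small context manager. The name opened and the RecordingTransport class are hypothetical; RecordingTransport exists only so the snippet runs without Thrift installed.

```python
from contextlib import contextmanager


@contextmanager
def opened(transport):
    """Open a transport and guarantee close() runs, even if scoring raises."""
    transport.open()
    try:
        yield transport
    finally:
        transport.close()


class RecordingTransport:
    """Hypothetical stand-in that records calls instead of using a socket."""

    def __init__(self):
        self.events = []

    def open(self):
        self.events.append("open")

    def close(self):
        self.events.append("close")


demo = RecordingTransport()
try:
    with opened(demo):
        raise RuntimeError("simulated scoring failure")
except RuntimeError:
    pass  # demo.events still ends with "close"
```

With a real Thrift transport, the call would become: with opened(transport): scores = client.score_reason_codes(row).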

You can make the same call from other languages, e.g. Java:

thrift --gen java scoring.thrift

// Dependencies:

// commons-codec-1.9.jar

// commons-logging-1.2.jar

// httpclient-4.4.1.jar

// httpcore-4.4.1.jar

// libthrift-0.10.0.jar

// slf4j-api-1.7.12.jar

import ai.h2o.scoring.Row;

import ai.h2o.scoring.ScoringService;

import org.apache.thrift.TException;

import org.apache.thrift.protocol.TBinaryProtocol;

import org.apache.thrift.transport.TSocket;

import org.apache.thrift.transport.TTransport;

import java.util.List;

public class Main {

public static void main(String[] args) {

try {

TTransport transport = new TSocket("localhost", 9090);

transport.open();

ScoringService.Client client = new ScoringService.Client(

new TBinaryProtocol(transport));

Row row = new Row(7.642, 3.436, 6.721, 1.020);

List<Double> scores = client.score_reason_codes(row);

System.out.println(scores);

transport.close();

} catch (TException ex) {

ex.printStackTrace();

}

}

}

MLI Scoring Service - HTTP Mode (JSON-RPC 2.0)

The HTTP mode lets you use the scoring service using plaintext JSON-RPC calls. This is usually less performant compared to Thrift, but has the advantage of being usable from any HTTP client library in your language of choice, without any dependency on Thrift.

For JSON-RPC documentation, see http://www.jsonrpc.org/specification.

To start the scoring service in HTTP mode:

----- See 'run_http_server.sh' for complete example. -----

python http_server.py --port=9090

To invoke scoring methods, compose a JSON-RPC message and make an HTTP POST request to http://host:port/rpc as follows:

----- See 'run_http_client.sh' for complete example. -----

curl http://localhost:9090/rpc \

--header "Content-Type: application/json" \

--data @- <<EOF

{

"id": 1,

"method": "score_reason_codes",

"params": {

"row": [ 7.486, 3.277, 4.755, 2.354 ]

}

}

EOF

Similarly, you can use any HTTP client library to reproduce the above result. For example, from Python, you can use the requests module as follows:

import requests

row = [7.486, 3.277, 4.755, 2.354]

req = dict(id=1, method='score_reason_codes', params=dict(row=row))

# json= sends the dict as a JSON body; data= would form-encode it instead.

res = requests.post('http://localhost:9090/rpc', json=req)

print(res.json()['result'])
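If the requests package is not available, the same call can be sketched with the Python standard library. The helper names build_rpc_request and parse_rpc_response are illustrative, not part of the package. The payload mirrors the message shown in the curl example, and the error check relies on the JSON-RPC 2.0 convention that a failed call returns an "error" member instead of "result"; how this particular server reports errors is an assumption.

```python
import json
import urllib.request


def build_rpc_request(url, method, row, request_id=1):
    """Build (but do not send) a JSON POST request for the scoring service."""
    payload = {"id": request_id, "method": method, "params": {"row": row}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_rpc_response(body):
    """Return the 'result' member, or raise if the reply carries an 'error'."""
    reply = json.loads(body)
    if "error" in reply:
        raise RuntimeError(f"scoring service returned an error: {reply['error']}")
    return reply["result"]


request = build_rpc_request(
    "http://localhost:9090/rpc",
    "score_reason_codes",
    [7.486, 3.277, 4.755, 2.354],
)

# To send it against a running server:
# with urllib.request.urlopen(request) as response:
#     print(parse_rpc_response(response.read()))
```

Building the request separately from sending it makes the payload easy to inspect or log before it reaches the server.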